This post was originally published by Raise Your Hand Texas.

Over the next 3 years, the 5 Raising Blended Learners demonstration sites, along with the 15 sites in the Pilot Network, will dramatically change how schools design and deliver education. These sites will soar and they will struggle. They will feel the headache of buggy software, but also the elation of watching a student who never liked school suddenly take to learning in a new way. Along this journey, these sites may find kinship with others nationwide who have experimented with blended learning, but they will also test new approaches, navigate new obstacles, and learn new lessons unique to Texas and the contexts of their own communities.

The pace of change and range of possibilities are what make blended learning so exciting. And we know that a few years from now, as with other innovations, these Raising Blended Learners sites will look quite different than they do today. Amidst this evolution lies a simple but challenging question: How do we evaluate whether this effort is successful?

Typically in education, we might respond by dividing students or schools into groups and comparing their progress on test scores or other standard indicators over a set period of time. But this gets complicated when you consider that a school might cycle through a few different blended learning models over several years, or even shift approaches within the same school year.

Are ensuing gains or losses mainly due to the fourth iteration of the blended learning model or the third?

And what if the second model actually worked best, but only for a small subpopulation of students?

In both cases, the insight hides inside the average: a single schoolwide comparison would blend the different models and student groups together. Furthermore, blended learning is rarely the only thing changing at a school. Trying to attribute impact to blended learning while a school also has a new principal, a new curriculum, and other changes can lead to unreliable data and questionable conclusions.

Faced with such a dynamic, complex environment, what’s an evaluator to do? To be clear, we are not saying that controlled experiments don’t matter or that there’s no place in education for randomized controlled trials. There is. It’s just that in the early stages of blended learning, we need approaches to evaluation that match the pace of change in these new schools.*

With the Raising Blended Learners initiative, FSG is undertaking one such approach to evaluation: developmental evaluation, which draws from the fields of complexity science and social innovation but is relatively new to education. Developmental evaluation, a concept popularized by the well-known evaluator Michael Quinn Patton (among others), is uniquely suited to assessing an innovation like blended learning as it unfolds. Developmental evaluation is not about passing judgment on whether something “works.” Rather, it seeks to understand new models as they are built, observing intended and unintended consequences, and using rapid feedback loops and iterative data to learn and improve over time.

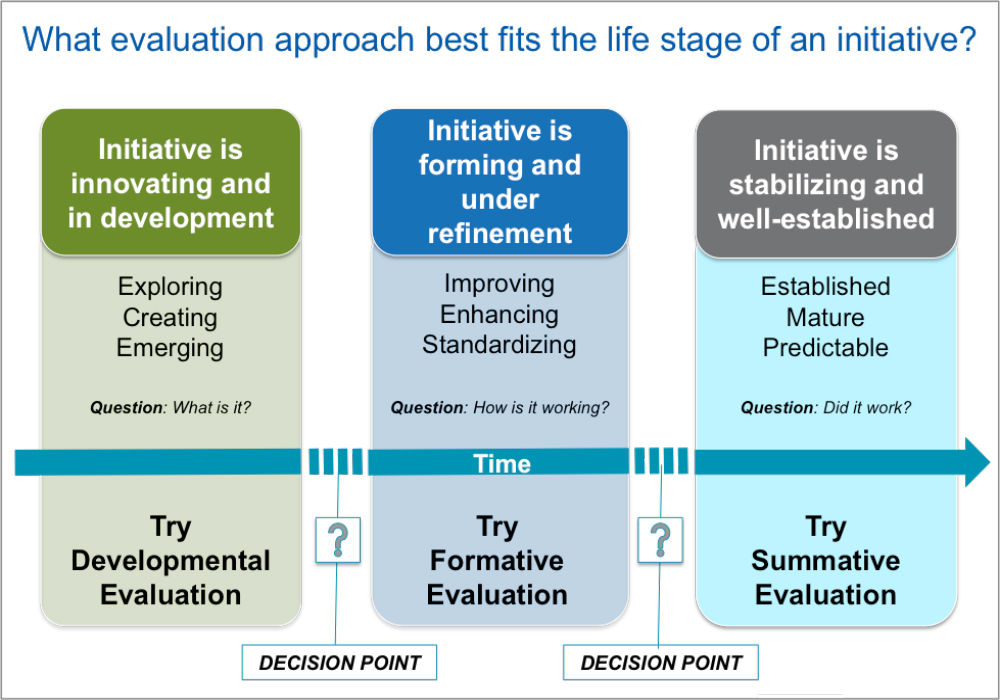

Developmental evaluation is one form of evaluation. As the graphic makes clear, it is best suited to the early stages of an innovation, and can cycle into more traditional formative and summative approaches to evaluation over time.

So how might a developmental approach to Raising Blended Learners look?

For starters, in Year 1 of the initiative (when sites are just starting to implement blended learning), we’ll focus on defining what is emerging, and understanding how different stakeholders (students, parents, teachers, and leaders) are experiencing both the good and the bad of blended learning. We’ll also help the sites define success based on their own contexts and needs, then play back observations and real-time data to inform ongoing improvement. Our hope is that a developmental approach will help schools preserve the space to innovate and try new things without the rush to judgment too often present in evaluation.

By documenting what’s emerging in blended learning’s early stages, we’ll also shine a light on the potential steps other schools in Texas (particularly district schools) can take on their own blended learning journeys.

As Raising Blended Learners evolves from 2016 to 2019, our evaluation approach will begin to integrate more formative and, eventually, more summative components. We’ll maintain a sharp focus on feedback loops and continuous improvement, but also look for signs of where implementation appears promising, and where it seems off track.

The end result will be a mixture of multi-year quantitative and qualitative data that helps us understand the conditions and contexts under which blended learning is working particularly well, for which students, and why.

This is a complex answer to the question of whether blended learning is successful, but it matches the reality of blended learning implementation in Texas, and hopefully provides a viable path for spreading good work across the state.

*While developmental evaluation is a promising approach, it’s important to note the recent, thoughtful contributions that others have made toward new ways of conducting evaluation and research in innovative, quickly changing schools. These include the Christensen Institute’s call for a new approach to research, the Carnegie Foundation for the Advancement of Teaching’s work on improvement science, and a recent article, Getting “Moneyball” Right in the Social Sector, from Lisbeth Schorr and Srik Gopal.