Evaluation can be a powerful force for change within organizations. As a result of how we assess our efforts, we pay attention to different areas and invest differently. I assume that those reading this post are the sort of people who would change their approach if they knew, for example, that their strategy for reducing crime led to an increase in crime. This is an important assumption, as we do have systems that yield unintentional inverse results.

The FSG report Evaluating Complexity: Propositions for Improving Practice by Hallie Preskill and Srik Gopal provides important direction for thinking about how to approach evaluation in complex settings. One of the core ideas in the report is that we can’t evaluate complex, non-linear phenomena such as we find in nearly all social change settings using linear, reductive, simplistic measures. If we do, the resulting guidance provided by the measures will at best be useless for building judgement about future decisions, and may actually be harmful, making things worse and masking valuable potential insights.

The ability to improve evaluation of complex dynamics is a critical growth area for social and cultural change leaders.

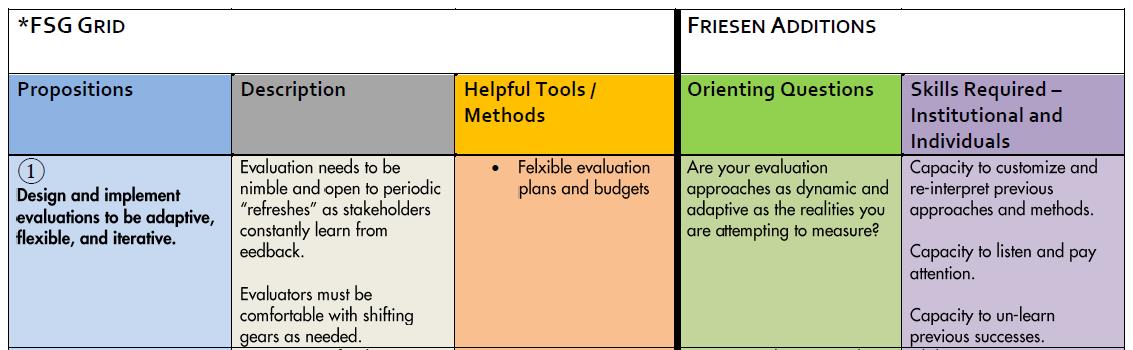

In studying Evaluating Complexity recently, I found myself pondering how the utility of the core ideas could be extended for initiative partners, funders, and those affected by intervention efforts. While I was riding the bus one morning, the idea of adding 2 additional columns to the “Questions and Skills Supplement” chart came to me.

- Orienting questions. Most people, even very intelligent and committed people, tend to enter a catatonic state when complex systems, complex adaptive systems, and non-linear functions enter the conversation. I have often found that questions help increase the mental traction of a concept, so I added another column with orienting questions for each of the 9 Propositions. For example, Proposition 1 says: “Design and implement evaluations to be adaptive, flexible, and iterative.” In the new Orienting Questions column I added “Are your evaluation approaches as dynamic and adaptive as the realities you are attempting to measure?” Whether you are a project team member, a funder, or someone affected by the changes being pursued, engaging around this question will draw people back into the proposition and provide a safeguard against simplistic or ill-fitted evaluation measures.

- Skills required. This column supplements the Helpful Tools/Methods column in the original FSG chart. The intention is to prompt reflection on what kinds of capabilities may be needed to undertake the evaluation. Another way to think about it is to consider what will be required to move the questions toward answers. Considering the capability requirements may be useful as a precursor to the Helpful Tools/Methods, as it generates clarity about the nature of what is being pursued and what the opportunities and limits in a given setting might be. Following the above example, for Proposition 1, I have suggested three required skills:

- Capacity to customize and re-interpret previous approaches and methods;

- Capacity to listen and pay attention;

- Capacity to un-learn previous successes.

These skills can be considered at individual and institutional levels. In my experience, it is easier to get people to agree to a need for change than it is to keep them moving when their own limits and discomforts slow down those needed changes. This is also true of organizations. Many people have a difficult time re-interpreting what they know, listening, and un-learning. If we know these skills are required, conversations around these ideas can be more carefully designed into the process of evaluating complexity. This will improve our ability to navigate the resistance together.

While the above additions are initial sketches, I have found them useful in drawing me back into consideration of the core propositions for evaluating complexity. The “Questions and Skills Supplement” gives evaluators, service delivery teams, and leaders additional leverage points for moving the assessment process into more fruitful terrain. There are doubtless other considerations that could build on the great start that the FSG Evaluating Complexity proposal makes.

“Questions and Skills Supplement” with 2 new additions.

What other key considerations would you add when thinking about evaluating complexity?

Milton Friesen is the Program Director of Social Cities and a Senior Fellow at Cardus, a public policy think tank. He is nearing completion of a Ph.D. at the University of Waterloo, School of Planning.